Table of Contents

- Integrating External Systems alongside Unique ...
- The Strategic Value for Bespoke against Standa...
- Establishing Credibility plus Results through...
- Exploring the Advancement of Online Retail: D...
- How Establishes a Winning Shopping Experienc...
- Exploring the Integration of Creative Direct...

Implementing AI is not a sprint. It takes research, analysis, and testing to determine the role of AI in your business and to ensure a safe, secure, ethical, and ROI-driven rollout. This article covers the key considerations, challenges, and stages of the AI project cycle.

Your first objective is to identify AI's role in your processes. The easiest way to approach this is to work backwards from your goal(s): what do you want to accomplish by implementing AI?

Integrating External Systems alongside Unique Digital Solutions

Look for use cases where you have already seen a convincing demonstration of the technology's potential. In the finance industry, AI has proven its value for fraud detection. Machine learning and deep learning models outperform traditional rules-based fraud detection systems by delivering a lower rate of false positives and by identifying new types of fraud more reliably.

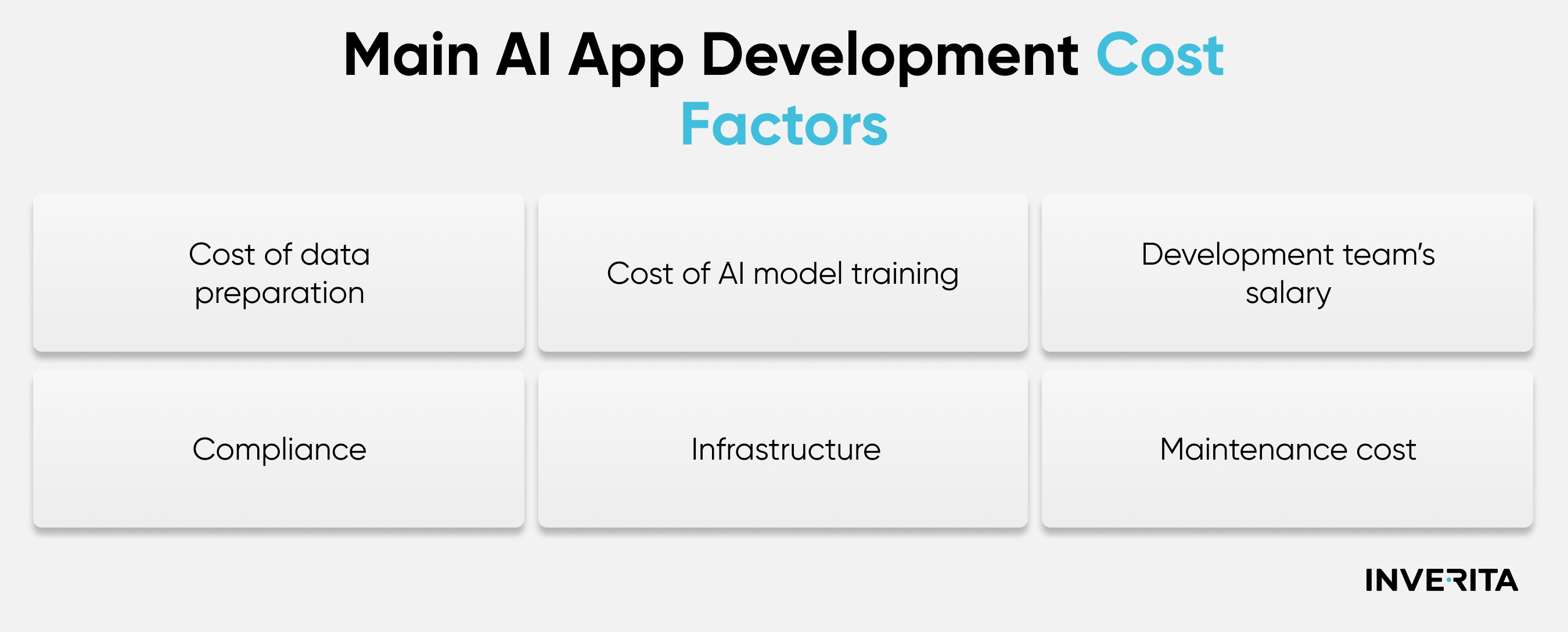

Researchers agree that synthetic datasets can improve privacy and representation in AI, especially in sensitive industries like healthcare or finance. Gartner predicts that by 2024, as much as 60% of data for AI will be synthetic. All the training data you obtain will then have to be pre-cleansed and cataloged. Use a consistent taxonomy to establish clear data lineage, and then track how different users and systems use the data.

The Strategic Value for Bespoke against Standard Web Properties

In addition, you'll have to split the available data into training, validation, and test datasets to benchmark the developed model. Mature AI development teams handle most data management processes with data pipelines: an automated sequence of steps for data ingestion, processing, storage, and subsequent access by AI models. This, in turn, makes data more accessible for thousands of concurrent users and machine learning jobs.
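The split described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a prescribed method: the function name `split_dataset`, the 70/15/15 ratio, and the fixed seed are all assumptions chosen for the example.

```python
import random


def split_dataset(records, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle records and split them into train, validation, and test lists.

    The fractions and the seed are illustrative defaults, not recommendations.
    """
    if train_frac <= 0 or val_frac < 0 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave room for a test set")

    shuffled = list(records)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)

    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)

    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # everything left over
    return train, val, test


train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))
```

Shuffling before slicing matters when records arrive sorted (for example by date or customer), because otherwise the test set would not resemble the training set. For time-dependent data a chronological split may be more appropriate than a random one.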

Establishing Credibility plus Results through Intentional Development Strategies

The training process is complex, too, and prone to issues such as sample efficiency, training stability, and catastrophic interference, among others. By starting from a pre-trained model and fine-tuning it, you can train a new-generation AI algorithm much faster.

Unlike traditional ML models for natural language processing, foundation models need smaller labeled datasets because they already carry knowledge embedded during pre-training. That said, foundation models can still produce inaccurate and inconsistent results, especially when applied to domains or tasks that differ from their training data. Training a foundation model from scratch also requires massive computational resources.

Exploring the Advancement of Online Retail: Developments plus Outlooks

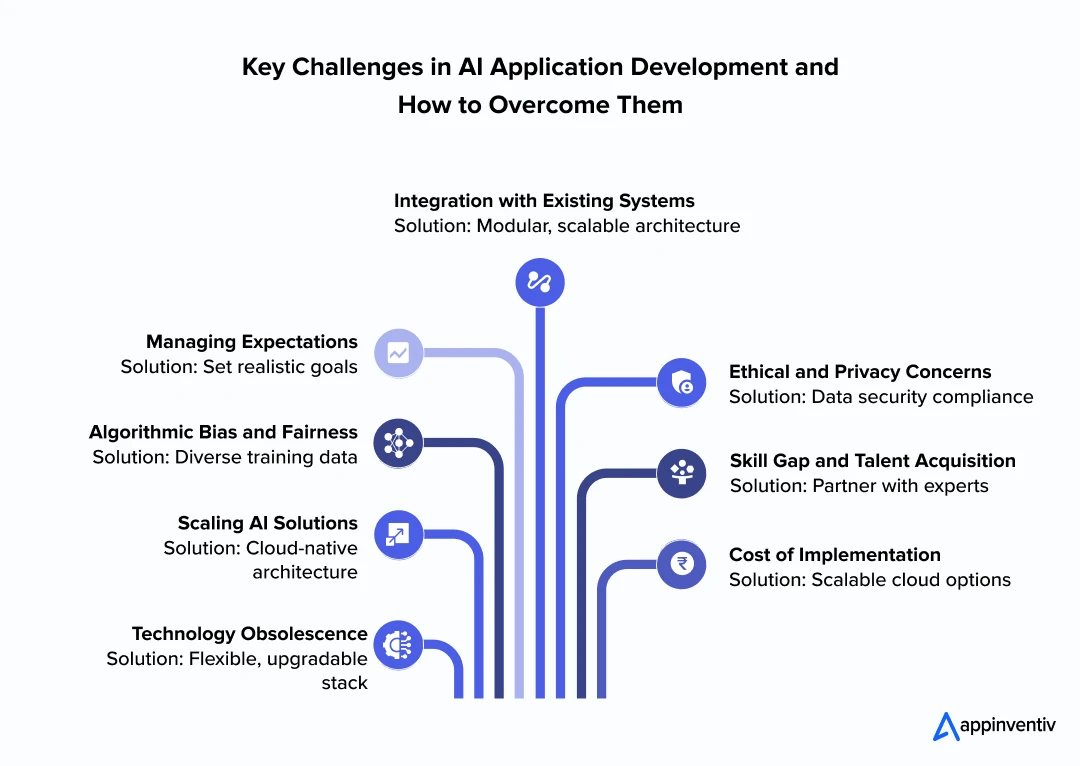

One problem, commonly called training-serving skew, occurs when model training conditions differ from deployment conditions. In effect, the model doesn't produce the desired results in the target environment because of differences in parameters or configurations. Another, commonly called data drift, occurs when the statistical properties of the input data change over time, affecting the model's performance. For example, if the model dynamically optimizes prices based on the total number of orders and conversion rates, but these parameters change significantly over time, it will no longer provide accurate suggestions.
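One common way to check whether an input feature has shifted since training is the population stability index (PSI), which compares how two samples fall into the same set of bins. The sketch below is an illustration under stated assumptions: the text does not say which drift check to use, and the bin count, the `1e-6` floor, and the 0.25 alert threshold are conventional rules of thumb rather than values from the article.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two samples of one numeric feature; a larger PSI means more drift.

    `expected` is the training-time sample, `actual` is the live sample.
    Bins are equal-width and derived from the training sample.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the training range land in the first or last bin.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    p_exp = proportions(expected)
    p_act = proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(p_exp, p_act))


# Hypothetical data: order counts seen in training vs. a shifted live sample.
training_orders = [i / 10 for i in range(1000)]
live_orders = [50 + i / 20 for i in range(1000)]

DRIFT_THRESHOLD = 0.25  # assumed rule-of-thumb threshold
psi = population_stability_index(training_orders, live_orders)
print("drift detected" if psi > DRIFT_THRESHOLD else "stable")
```

A check like this would typically run on a schedule for each important input feature, with a threshold breach triggering a review or a retraining job.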

Instead, most teams maintain a database of model versions and train iteratively to progressively improve the quality of the final product. On average, AI developers shelve about 80% of the models they produce, and only 11% are successfully deployed to production. ... is one of the key techniques for training better AI models.

You benchmark the iterations to identify the model version with the highest accuracy. A model with too few features struggles to adapt to variations in the data, while too many features can lead to overfitting and worse generalization.
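Benchmarking stored versions against each other can be as simple as sorting a list of records. The following sketch assumes a hypothetical in-memory registry; the `ModelVersion` fields, the version names, and the accuracy figures are made up for illustration. Preferring the smaller model on a tie is a design choice for this example that follows from the overfitting concern above, not something the text prescribes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersion:
    version: str
    n_features: int
    val_accuracy: float  # accuracy measured on the validation set


def best_version(versions):
    """Pick the version with the highest validation accuracy.

    Ties go to the model with fewer features, since a smaller model is
    less likely to have overfitted.
    """
    return max(versions, key=lambda m: (m.val_accuracy, -m.n_features))


# Illustrative registry contents (invented numbers).
registry = [
    ModelVersion("v1", n_features=5, val_accuracy=0.81),
    ModelVersion("v2", n_features=20, val_accuracy=0.88),
    ModelVersion("v3", n_features=200, val_accuracy=0.88),
]

print(best_version(registry).version)
```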

How Establishes a Winning Shopping Experience in 2024

But it's also the most error-prone one. Only 32% of ML projects, including refreshing models for existing deployments, typically reach deployment.

Deployment success across different machine learning projects

The reasons for failed deployments range from a lack of executive support for the project due to unclear ROI to technical difficulties with ensuring stable model operations under increased loads.

The team needed to ensure that the ML model was highly available and served highly personalized recommendations from the titles available on the user's device, and that it did so for the platform's very large user base. To ensure high performance, the team decided to run model scoring offline and then serve the results once the user logs into their device.
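The offline-scoring pattern described above separates an expensive batch job from a cheap lookup at login time. Here is a minimal sketch of that idea. The function names, the dictionary-based cache, and the toy scoring function are all assumptions made for the example; the text does not describe how the team actually built it.

```python
def score_offline(users, catalog_by_device, score_fn, top_n=3):
    """Batch job: precompute the top-N titles for every user.

    `users` maps user id to device type, and `catalog_by_device` maps a
    device type to the titles available on it.
    """
    cache = {}
    for user_id, device in users.items():
        titles = catalog_by_device.get(device, [])
        ranked = sorted(titles, key=lambda t: score_fn(user_id, t), reverse=True)
        cache[user_id] = ranked[:top_n]
    return cache


def recommendations_on_login(cache, user_id):
    """Serving path: a plain lookup, with no model call at request time."""
    return cache.get(user_id, [])


# Toy inputs. A real score_fn would call the trained model.
users = {"u1": "tv", "u2": "phone"}
catalog_by_device = {"tv": ["A", "BBB", "CC"], "phone": ["DD"]}
toy_score = lambda user_id, title: len(title)

cache = score_offline(users, catalog_by_device, toy_score, top_n=2)
print(recommendations_on_login(cache, "u1"))
```

The trade-off is freshness: recommendations are only as current as the last batch run, so the batch schedule has to match how quickly user behavior and the catalog change.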

Exploring the Integration of Creative Direction with Development in Today's Web Applications

It also helped the company optimize cloud infrastructure costs. Ultimately, successful AI model deployments come down to having effective processes. Just as the DevOps principles of continuous integration (CI) and continuous delivery (CD) streamline the deployment of regular software, MLOps increases the speed, efficiency, and predictability of AI model deployments. MLOps is a set of practices and tools that AI development teams use to build a sequential, automated pipeline for releasing new AI solutions.
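To make the idea of a sequential, automated pipeline concrete, here is a toy sketch in plain Python. It is not how any particular MLOps tool works. The step names, the `context` dictionary, and the stand-in "training" step are hypothetical, and they only show how an evaluation gate can block a release automatically.

```python
def run_pipeline(steps, context):
    """Run steps in order and stop at the first one that returns False.

    Returns the names of completed steps and the name of the blocking
    step (or None if every step passed).
    """
    completed = []
    for name, step in steps:
        if not step(context):
            return completed, name
        completed.append(name)
    return completed, None


def validate_data(ctx):
    return len(ctx["rows"]) > 0


def train(ctx):
    # Stand-in for real training: "accuracy" is just the share of 1-labels.
    ctx["accuracy"] = sum(ctx["rows"]) / len(ctx["rows"])
    return True


def evaluation_gate(ctx):
    # The release is blocked unless the model meets the agreed threshold.
    return ctx["accuracy"] >= ctx["min_accuracy"]


def deploy(ctx):
    ctx["deployed"] = True
    return True


STEPS = [
    ("validate_data", validate_data),
    ("train", train),
    ("evaluation_gate", evaluation_gate),
    ("deploy", deploy),
]

done, blocked_at = run_pipeline(STEPS, {"rows": [1, 1, 0, 1], "min_accuracy": 0.7})
print(done, blocked_at)
```

Raising `min_accuracy` above the measured accuracy makes the pipeline stop at `evaluation_gate`, so `deploy` never runs. That is the predictability benefit the paragraph above attributes to MLOps.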